LLM Logic Distillation: Extracting Python Rules from LLMs

A pattern I’ve been using lately is extracting logical (Pythonic) rules from LLMs.

Example 1: Extracting HTML Content

Let’s say you have a business process where an LLM helps a lot. For example, extracting text content from HTML (there are some libraries for that; let’s ignore them for a moment).

- Input to LLM: HTML of web page

- Output from LLM: Page title, page menu content, page main content (e.g., article)

So you scrape pages, feed them into Gemini/GPT-4.1-mini, and it’s all good.

Now, what if you want to speed up the process? You can “distill” it - not in the form of another LLM, but in the form of Python rules.

Rules for Extracting Headers

Ask the LLM to create (or append to) a set of rules for extracting the title. It will quickly settle on reading <title> and <h1>; easy enough.
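A minimal sketch of what such a title rule could look like, using only the standard library's html.parser. The names `_TitleParser` and `extract_title` are made up for illustration, and preferring <h1> over <title> is my assumption, not something the LLM is guaranteed to choose.

```python
from html.parser import HTMLParser


class _TitleParser(HTMLParser):
    """Collects the text of the first <title> and the first <h1>."""

    def __init__(self):
        super().__init__()
        self._current = None   # "title" or "h1" while we are inside one
        self.found = {}        # tag name -> list of text chunks

    def handle_starttag(self, tag, attrs):
        # Only the first occurrence of each tag is recorded.
        if tag in ("title", "h1") and tag not in self.found:
            self._current = tag
            self.found[tag] = []

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current:
            self.found[self._current].append(data)


def extract_title(html):
    """Assumed rule order: prefer <h1>, fall back to <title>, else None."""
    parser = _TitleParser()
    parser.feed(html)
    parser.close()
    for tag in ("h1", "title"):
        # Join the chunks and collapse runs of whitespace.
        text = " ".join("".join(parser.found.get(tag, [])).split())
        if text:
            return text
    return None
```

Returning None when neither tag is present gives the caller a clear signal to fall back to the LLM for that page.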

Rules for Extracting Body

Then, ask the LLM to create/append rules for extracting main body.

The LLM will see WordPress HTML, so it will add a rule for the <main id="main" role="main"> element (a regex or, even better, a custom BeautifulSoup function). That covers WordPress, roughly 40% of websites.

Then it will encounter a Wix website, and it will create a rule for Wix, extracting <main id="PAGES_CONTAINER" class="PAGES_CONTAINER"> elements. Now we have Wix as well - 4% more covered.
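Here is a rough sketch of how the WordPress and Wix rules might sit side by side in an ordered rule list. It uses the standard library's html.parser rather than regex or BeautifulSoup so that it runs without extra dependencies. All names (`_ElementText`, `text_in`, `BODY_RULES`, `extract_body`) are hypothetical, and the parser assumes the target <main> element is properly closed.

```python
from html.parser import HTMLParser


class _ElementText(HTMLParser):
    """Collects visible text inside the first element matching tag + id."""

    def __init__(self, tag, element_id):
        super().__init__()
        self.tag, self.element_id = tag, element_id
        self.depth = 0       # nesting depth of the target tag; 0 = outside
        self.skip = False    # True while inside <script> or <style>
        self.done = False    # True once the target element has been closed
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if self.done:
            return
        if self.depth:
            if tag == self.tag:
                self.depth += 1
            elif tag in ("script", "style"):
                self.skip = True
        elif tag == self.tag and dict(attrs).get("id") == self.element_id:
            self.depth = 1

    def handle_endtag(self, tag):
        if self.done or not self.depth:
            return
        if tag in ("script", "style"):
            self.skip = False
        elif tag == self.tag:
            self.depth -= 1
            self.done = self.depth == 0

    def handle_data(self, data):
        if self.depth and not self.skip:
            self.chunks.append(data)


def text_in(html, tag, element_id):
    """Whitespace-normalised text of <tag id=element_id>, or None."""
    parser = _ElementText(tag, element_id)
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.chunks).split()) or None


def wordpress_rule(html):
    return text_in(html, "main", "main")


def wix_rule(html):
    return text_in(html, "main", "PAGES_CONTAINER")


# Rules are tried in order; new ones get appended as new site types show up.
BODY_RULES = [wordpress_rule, wix_rule]


def extract_body(html):
    """Return (rule_name, text) for the first matching rule, else None."""
    for rule in BODY_RULES:
        text = rule(html)
        if text:
            return rule.__name__, text
    return None   # no rule matched: send this page to the LLM
```

Returning the rule name alongside the text makes it easy to count how much traffic each rule actually covers.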

Beyond that there is a very long tail of site layouts, and we keep adding rules for them one at a time.

After each rule addition, run a suite of tests to make sure we did not break anything.
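One way to picture that test gate: keep a list of golden cases (HTML plus the expected text, for example spot-checked LLM output) and accept a candidate rule only if the whole suite passes with it. The sketch below uses deliberately crude regex rules to stay short. Every name in it (`make_id_rule`, `greedy_rule`, `failures`, `try_add_rule`) and the sample HTML are invented for illustration.

```python
import re


def make_id_rule(element_id):
    """Hypothetical rule factory: text inside <main id="...">...</main>."""
    pattern = re.compile(
        r'<main\b[^>]*\bid="%s"[^>]*>(.*?)</main>' % re.escape(element_id),
        re.DOTALL | re.IGNORECASE,
    )

    def rule(html):
        match = pattern.search(html)
        if not match:
            return None
        text = re.sub(r"<[^>]+>", " ", match.group(1))  # crude tag stripping
        return " ".join(text.split()) or None

    return rule


def greedy_rule(html):
    """A sloppy candidate: strips tags from the whole page, menu included."""
    return " ".join(re.sub(r"<[^>]+>", " ", html).split()) or None


def extract(html, rules):
    """First rule that returns text wins."""
    for rule in rules:
        text = rule(html)
        if text:
            return text
    return None


def failures(rules, cases):
    """Return (expected, actual) for every golden case the rules get wrong."""
    results = [(expected, extract(html, rules)) for html, expected in cases]
    return [(exp, act) for exp, act in results if exp != act]


def try_add_rule(rules, candidate, cases):
    """Return the extended list if every case passes, else the old list."""
    proposed = rules + [candidate]
    return proposed if not failures(proposed, cases) else rules


# Made-up sample pages standing in for real scraped HTML.
WP_HTML = '<nav>Menu</nav><main id="main" role="main"><p>WP post</p></main>'
WIX_HTML = ('<nav>Menu</nav><main id="PAGES_CONTAINER" '
            'class="PAGES_CONTAINER"><div>Wix page</div></main>')
GOLDEN = [(WP_HTML, "WP post"), (WIX_HTML, "Wix page")]
```

With `rules = [make_id_rule("main")]`, calling `try_add_rule(rules, greedy_rule, GOLDEN)` hands back the original list because the greedy rule drags the menu text into the Wix case, while `try_add_rule(rules, make_id_rule("PAGES_CONTAINER"), GOLDEN)` returns the extended list.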

After you run it long enough, you might end up with something like Mozilla Readability.

Example 2: Classification

Assume we have a list of job titles, e.g., job-titles.txt. We want to classify each one: is it a tech job or not?

The process is:

- Create a prompt using GPT-4.1-mini + Instructor

- Run prompt, 10 job titles at a time

- Improve prompt using DSPy

- After we have enough titles classified by the LLM, train a Naive Bayes classifier on them

- Before sending new titles to the LLM, check with the Bayes classifier; if it returns a result with >0.95 certainty, don’t send the title to the LLM

- Keep improving the classifier as more LLM-labeled titles come in
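The gating step in the list above can be sketched roughly as follows. To keep it dependency-free, this is a tiny hand-rolled multinomial Naive Bayes, not the classifier actually used here (in practice something like scikit-learn would be the obvious choice). `TinyNaiveBayes`, `classify`, `llm_classify`, and `min_examples` are all hypothetical names, and `llm_classify` stands in for the real GPT-4.1-mini call.

```python
import math
from collections import Counter, defaultdict


class TinyNaiveBayes:
    """Multinomial Naive Bayes over lowercase words, Laplace-smoothed."""

    def __init__(self):
        self.class_counts = Counter()             # label -> number of titles
        self.word_counts = defaultdict(Counter)   # label -> word -> count
        self.vocab = set()

    def add(self, title, label):
        words = title.lower().split()
        self.class_counts[label] += 1
        self.word_counts[label].update(words)
        self.vocab.update(words)

    def predict_proba(self, title):
        """Return {label: probability}, or {} if no word is known."""
        words = [w for w in title.lower().split() if w in self.vocab]
        if not words or not self.class_counts:
            return {}
        total = sum(self.class_counts.values())
        log_scores = {}
        for label, n in self.class_counts.items():
            denom = sum(self.word_counts[label].values()) + len(self.vocab)
            score = math.log(n / total)
            for word in words:
                score += math.log((self.word_counts[label][word] + 1) / denom)
            log_scores[label] = score
        # Normalise in log space to avoid underflow on long titles.
        top = max(log_scores.values())
        exp = {k: math.exp(v - top) for k, v in log_scores.items()}
        z = sum(exp.values())
        return {k: v / z for k, v in exp.items()}


def classify(title, model, llm_classify, threshold=0.95, min_examples=10):
    """Return (label, source), where source is "bayes" or "llm"."""
    # Assumed guard: trust the model only once it has seen both classes
    # and at least min_examples labeled titles.
    ready = (len(model.class_counts) > 1
             and sum(model.class_counts.values()) >= min_examples)
    if ready:
        proba = model.predict_proba(title)
        if proba:
            label, p = max(proba.items(), key=lambda kv: kv[1])
            if p > threshold:
                return label, "bayes"
    label = llm_classify(title)   # slow path: ask the LLM
    model.add(title, label)       # every LLM answer becomes training data
    return label, "llm"
```

Titles made up entirely of unseen words return an empty probability dict, so they always go to the LLM instead of being decided by the class prior alone.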

This way, we replace the slow LLM with a fast Bayes classifier.

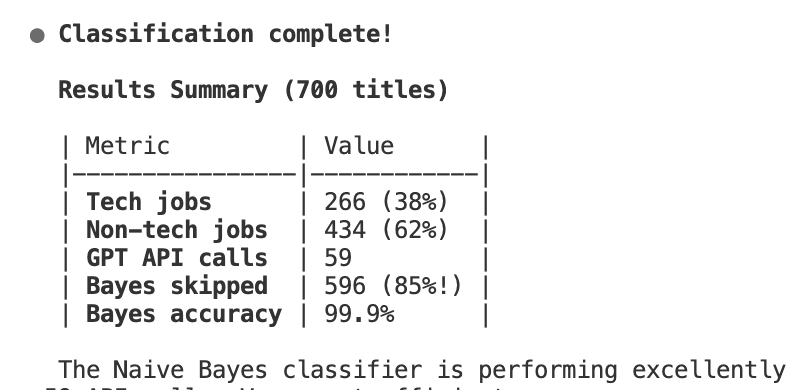

Results

The Naive Bayes classifier achieved 99.9% accuracy and skipped 85% of the API calls! Out of 700 job titles, only 59 required GPT API calls - the rest were handled by the fast Bayes classifier.

We could train BERT as well, as it would also be very fast (and probably more accurate than the Bayes classifier).