Expert Trajectory RAG: Claude Code-Level Quality with Internal Models

How to achieve Claude Code-level quality using internal coding models?

Disclosure: This approach fits only specific scenarios and requires significant pre-computation, paid for in tokens.

When to Use This

This is for you if:

- You have code you cannot share with public models; AND/OR

- You must work locally; AND

- You want SOTA results (assume SOTA = Claude Code)

Solution TL;DR

Assume you want to install “Langfuse” locally, and you can’t use Claude Code, ChatGPT, or other public models on your own code. The flow is basically RAG over thousands of pre-generated scenarios:

- Ask Claude Code: “How to install Langfuse locally?”

- Save the markdown file

- Introduce change request: “How to change ports of services?”

- Ask Claude Code to fix and validate

- Save tutorial with metadata:

  - Keywords: ports,mapping,dockers

  - Description: “changing ports of services”

- Introduce change request: “How to use ELK with this setup?”

- Ask Claude Code to relate ELK to the existing setup, then save the tutorial with metadata:

  - Keywords: logs,monitoring,devops

  - Description: “Using ELK for logs”

- Rinse and repeat

- Push everything into RAG

- Let your internal model search over the very detailed instructions
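To make the "save tutorial with metadata" step concrete, here is a minimal sketch of one way a tutorial record could be stored. The `Tutorial` schema, its field names, and the JSON Lines format are my assumptions for illustration; any store your RAG stack can ingest would do.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Tutorial:
    """One saved tutorial (hypothetical schema, not a fixed format)."""
    title: str
    description: str       # short description of the problem
    keywords: list[str]    # basic keywords used for keyword search
    body_markdown: str     # the markdown produced by Claude Code


def to_json_line(tutorial: Tutorial) -> str:
    """Serialize a tutorial to a single line, ready to append to a .jsonl library."""
    return json.dumps(asdict(tutorial), ensure_ascii=False)


def from_json_line(line: str) -> Tutorial:
    """Rebuild a tutorial from one line of the .jsonl library."""
    return Tutorial(**json.loads(line))


ports_tutorial = Tutorial(
    title="Langfuse: changing service ports",
    description="changing ports of services",
    keywords=["ports", "mapping", "dockers"],
    body_markdown="# Changing ports\n(tutorial text goes here)",
)
line = to_json_line(ports_tutorial)
```

Appending one such line per tutorial gives a flat file that can later be indexed for both keyword and vector search.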

What This Enables

- Map your existing technologies

- Create thousands of such tutorials

- Given a problem, search thousands of working, accurate, verified tutorials

Tutorial Structure

Each tutorial should have:

- Basic keywords and a short description of the problem

Together, these enable efficient hybrid search (vector retrieval plus keyword matching) over the problem solutions.
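A rough sketch of how keywords and descriptions could be combined in a hybrid search follows. Everything here is an assumption for illustration: the `hybrid_search` function, the `alpha` weight, and especially the bag-of-words cosine similarity, which is only a stand-in for a real embedding model so the example runs without dependencies.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def hybrid_search(query, tutorials, alpha=0.5, top_k=3):
    """Rank tutorials (dicts with 'keywords' and 'description') for a query.

    alpha weights the keyword score; (1 - alpha) weights the vector score.
    """
    q_tokens = tokenize(query)
    q_vec, q_set = Counter(q_tokens), set(q_tokens)
    scored = []
    for t in tutorials:
        kw = {k.lower() for k in t["keywords"]}
        # Keyword part: fraction of the tutorial's keywords present in the query.
        keyword_score = len(q_set & kw) / len(kw) if kw else 0.0
        # Vector part: stand-in for embedding similarity (assumption, see lead-in).
        vector_score = cosine(q_vec, Counter(tokenize(t["description"])))
        scored.append((alpha * keyword_score + (1 - alpha) * vector_score, t))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop tutorials that match neither keywords nor description.
    return [t for score, t in scored[:top_k] if score > 0]


library = [
    {"keywords": ["ports", "mapping", "dockers"],
     "description": "changing ports of services"},
    {"keywords": ["logs", "monitoring", "devops"],
     "description": "Using ELK for logs"},
]
hits = hybrid_search("how do I change the ports of my services", library)
```

In a real setup you would likely swap the cosine stand-in for your internal embedding model and let a search engine handle the keyword side; the weighted sum is just one simple way to merge the two scores.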

Q&A

Q: How to prevent Information Leakage?

A: Assume you have a system using Python 2.7 with a very specific library for some proprietary task. If you ask Claude “I’m using Python 2.7 with lib XYZ, please help me fix bug ABC”, you leak information about your stack. The solution is to mass-generate thousands of solutions to virtually all possible combinations, so that your actual query gets lost in the noise.
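The mass-generation idea can be sketched as enumerating every combination of stack and problem, so the one you actually care about is just one row among many. The function name `build_query_batch` and the example lists are hypothetical placeholders, and how well this hides the real query depends on how plausible the other combinations look.

```python
import itertools
import random


def build_query_batch(runtimes, libraries, problems, seed=None):
    """Return one query per (runtime, library, problem) combination, shuffled.

    Shuffling keeps the order from hinting at which combination matters to you.
    """
    queries = [
        f"I'm using {runtime} with {library}, please help me fix {problem}"
        for runtime, library, problem in itertools.product(
            runtimes, libraries, problems
        )
    ]
    random.Random(seed).shuffle(queries)
    return queries


# Placeholder values; a real run would enumerate your mapped technologies.
batch = build_query_batch(
    runtimes=["Python 2.7", "Python 3.11"],
    libraries=["lib XYZ", "lib QRS"],
    problems=["bug ABC", "bug DEF", "bug GHI"],
    seed=0,
)
```

Each query in the batch would then go through the same ask, validate, and save flow, which is where the large token cost mentioned in the disclosure comes from.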

Q: Legal stuff? Is model distillation illegal?

A: Distilling a model to create a new model violates the TOS of the major LLM providers. However, creating a RAG library (“Expert Trajectory RAG”) is not prohibited, AFAIK.

Q: Why do we need keywords AND description for each scenario?

A: Hybrid search often gives better results than just vector search.

Q: Will this work in real life?

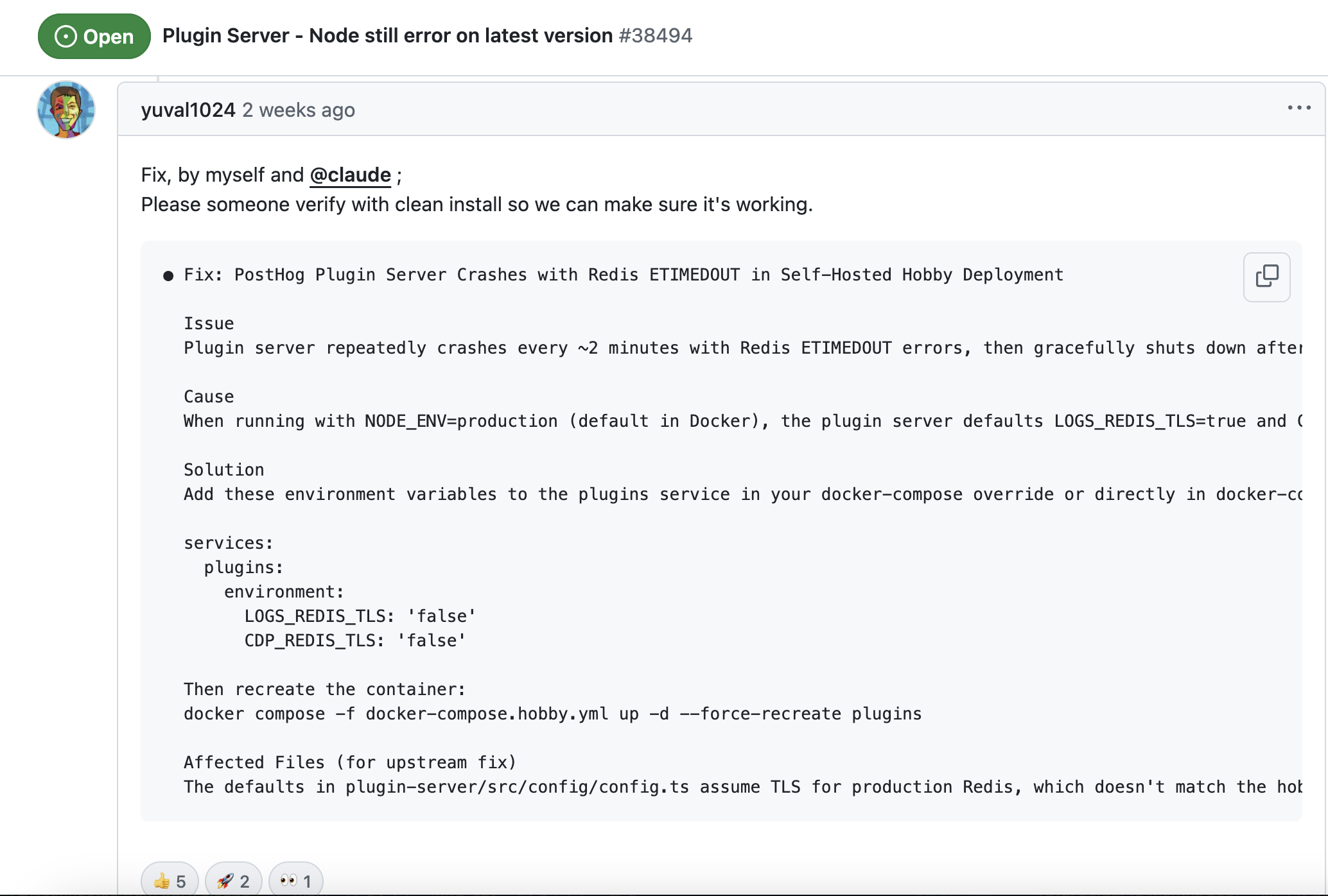

A: Yes. See this real-life example: PostHog Issue #38494. I installed PostHog locally, and Claude Code fixed an issue. It wasn’t 100% autonomous: I partly guided it on how to proceed. But with some simple agentic loops, I believe this can be fully autonomous.